Recently, I worked on a very interesting project that involved refactoring legacy code, a finite state machine (FSM), unit testing and modularization, and required deliberate use of SOLID principles. After the project’s completion, I realized that its deliverables could become a great educational resource, so I asked the client for permission to share the code with the community. They were amazingly accommodating and agreed.

Therefore, in this post, you’ll find a description of a complex FSM that solved a specific problem in a real-world production application. I’ll explain how I designed, implemented and tested this FSM and, more importantly, why I did it the way I did. I packaged the relevant parts of the code as an Android project and published it on GitHub, so you can clone it, review it and even run the tests yourself.

By the way, if you aren’t familiar with the concept of FSM, then I recommend reading this post before proceeding.

Project Description

Let me start by providing a bit of context about the original project. However, keep in mind that I can’t share many details, so it’s totally fine if you don’t see the entire picture.

The application in question has many features, is data-heavy and supports full offline work. Its codebase is about 10 years old and is relatively big (200+ KLOC). My project concerned the mechanism of data synchronization between the application and the server.

Originally, the sync mechanism relied on just a SyncAdapter, but, over the years, the requirements evolved and the sync process became much more involved. When I joined the project, the sync logic was spread across four Android Services and tens of static methods. Furthermore, the overall control flow of the sync was “distributed” among several classes that used global static variables to exchange state information.

At this point, you might be tempted to jump to a conclusion that the codebase I’m describing was a mess, but that wasn’t the case. Actually, for a project of this age and complexity, the source code was surprisingly good. It’s just that the forces of entropy, requirements changes and staff turnover naturally lead to gradual codebase aging. Integrate this tendency over 10 years and you’ll realize that no codebase of this age can be 100% clean.

The objective of my project was to refactor the existing sync logic to a cleaner state and then implement an additional sync mechanism to work around one particular issue that users had recently started to experience.

Finite State Machine (FSM)

After reviewing the existing code, I realized that, fundamentally, the app’s sync algorithm corresponds to a so-called Finite State Machine (FSM). Unfortunately, this FSM was “implicit”, meaning that its states and transitions weren’t explicitly declared in the code, but were spread across multiple classes in an ad-hoc manner. Therefore, with the assistance of the company’s staff, I had to reverse-engineer the requirements from the legacy code, and then refactor the sync mechanism into a proper FSM implementation.

Long story short, these were the states of the FSM after refactoring:

enum class SyncState {
    /**
     * Initial state
     */
    IDLE,
    /**
     * The app performs the first full sync after login
     */
    FIRST_EVER_SYNC,
    /**
     * The app performs the first sync after a session start
     */
    FIRST_SYNC,
    /**
     * Realtime sync operational
     */
    REALTIME,
    /**
     * Realtime sync not operational
     */
    FALLBACK,
    /**
     * The app performs full sync from scratch following "fail" indication from the server
     */
    RECOVERY_SYNC,
}

Aside from declaring the states explicitly, I also aggregated the actual logic of the FSM (inputs, outputs, transitions, etc.) in a single class called SyncController. This class basically encapsulates the “sync algorithm” and algorithm’s interdependencies with other features (e.g. app’s lifecycle).

Now, imagine that you join this company and are going to maintain this project going forward. Before the refactoring, you’d have to read hundreds of lines of complex code in many places to understand how sync works. After the refactoring, the algorithm is encapsulated inside SyncController and the low-level implementation details are handled by its explicit collaborators. Furthermore, you can easily find these collaborators because they are passed into SyncController as constructor arguments. Evidently, ramping-up on this codebase and maintaining it would be much simpler after refactoring.

That said, this refactoring didn’t just benefit the future maintainers, but was also instrumental in the success of my own project. To remind you, the end goal was to enhance the sync algorithm, not just refactor it. Therefore, after finishing the refactoring and going through a full QA cycle to fix all regressions, I proceeded to the next phase.

Speaking of QA (and debugging in general), FSMs enable much more useful and actionable logging. For example, each time a transition to a new state occurs, SyncController logs this information:

D/SyncController: setState(); old state: IDLE; new state: FIRST_EVER_SYNC
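To illustrate how this kind of logging can fall out of the design almost for free, here is a minimal sketch in which every state change goes through a single function. The class name `LoggingStateHolder`, the `d(tag, message)` method on `LoggerDelegate` and the `RecordingLogger` helper are assumptions made for this illustration; the published module may be structured differently.

```kotlin
// Hypothetical sketch: names and signatures are assumptions, not the published code.
enum class SyncState { IDLE, FIRST_EVER_SYNC, FIRST_SYNC, REALTIME, FALLBACK, RECOVERY_SYNC }

// Narrow logging abstraction (assumed shape).
interface LoggerDelegate {
    fun d(tag: String, message: String)
}

class LoggingStateHolder(private val logger: LoggerDelegate) {

    var currentState: SyncState = SyncState.IDLE
        private set

    // The only place where the state changes, so every transition gets logged.
    fun setState(newState: SyncState) {
        logger.d("SyncController", "setState(); old state: $currentState; new state: $newState")
        currentState = newState
    }
}

// Logger that records formatted lines in memory instead of printing them.
class RecordingLogger : LoggerDelegate {
    val lines = mutableListOf<String>()

    override fun d(tag: String, message: String) {
        lines += "D/$tag: $message"
    }
}
```

Because the state can only be changed through `setState`, there is no way to add a transition that silently skips the log.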

So, after I had refactored the code into a proper FSM, how difficult was it to introduce the new feature? Not difficult at all. Basically, this feature resulted in one additional state called POST_TIMEOUT_SYNC (which tells you right away what problem this new feature aimed to solve), one new class called LongSyncDelegate and a bunch of changes inside SyncController. In addition, the specifications of the FIRST_EVER_SYNC and RECOVERY_SYNC states changed considerably as well and required some additional work, but discussing that would take us way too deep into the woods, so let’s ignore this part.

In a parallel universe, where I attempted to hack the new feature on top of the original state of the code, I would have needed to perform so-called Shotgun Surgery. This means changing code in dozens of places (some of which seem totally unrelated) and then debugging all the tricky bugs and edge cases introduced by this approach. It could have taken a bit less time than starting with the refactoring, but it could also have taken considerably more. And, of course, the resulting code would have been even more complex and unclear.

Hopefully you see how implementing a proper FSM for this complex feature constituted a major improvement in the quality and the stability of the codebase. Please also note that this project reaped all of these benefits even before I mentioned unit testing, which I’ll do in the next section.

Unit Testing

Even though the refactoring to a formal FSM makes the logic much clearer, the FSM itself can still be very complex. Sure, there are simple FSMs with just two or three states and a very limited number of inputs, but that wasn’t the case here.

Consider, for example, the following requirement (reverse-engineered from the source code): “when fallback sync is in progress and the app goes to foreground, we should let the fallback sync complete normally, and then initialize the realtime sync mechanism and use it going forward. However, if realtime initialization fails, then we should keep using the fallback mechanism.” This is a complex requirement that concerns two different sync mechanisms, contains an error-handling specification and is also coupled to the lifecycle of the application. Implementing this requirement and verifying the correctness of the resulting logic is challenging.
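To give a feel for how even this single requirement translates into state handling, here is a heavily simplified sketch. Everything in it is hypothetical: the class `FallbackToRealtimeSketch`, the `RealtimeInitializer` interface, the synchronous `initialize()` call and the decision to try realtime immediately when no fallback sync is running are assumptions made for illustration, not the logic of the real SyncController.

```kotlin
// Hypothetical sketch of one requirement; not the real SyncController logic.
enum class SyncState { FALLBACK, REALTIME }

// Assumed abstraction over the realtime mechanism; returns true on success.
fun interface RealtimeInitializer {
    fun initialize(): Boolean
}

class FallbackToRealtimeSketch(private val realtime: RealtimeInitializer) {

    var state = SyncState.FALLBACK
        private set

    private var fallbackSyncInProgress = false
    private var realtimeInitPending = false

    fun onFallbackSyncStarted() {
        fallbackSyncInProgress = true
    }

    fun onAppForegrounded() {
        if (state != SyncState.FALLBACK) return
        if (fallbackSyncInProgress) {
            // Don't interrupt the running sync; remember to switch once it completes.
            realtimeInitPending = true
        } else {
            // Assumption: with no fallback sync running, try realtime right away.
            tryToStartRealtime()
        }
    }

    fun onFallbackSyncCompleted() {
        fallbackSyncInProgress = false
        if (realtimeInitPending) {
            realtimeInitPending = false
            tryToStartRealtime()
        }
    }

    private fun tryToStartRealtime() {
        // On failure the state stays FALLBACK, so the fallback mechanism remains in use.
        if (realtime.initialize()) {
            state = SyncState.REALTIME
        }
    }
}
```

Even in this toy version, the behavior depends on the order of three independent events plus a success/failure outcome, which hints at how quickly the number of cases grows in the full state machine.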

But the real problem is not the initial implementation or QA, but further development and maintenance. How can you ensure that the logic you’ve just written won’t break the moment you implement the next requirement? If you only test your application manually, then you’ll basically need to redo all manual tests related to sync every time you change anything inside SyncController. Otherwise, you risk releasing buggy code which can, for example, enter an invalid state and prevent syncs from taking place until the app is restarted (a real bug found during QA). And even if you have an outstanding manual QA team, you can end up in a situation where fixing one bug that they found introduces another one. This way, the app will go through several QA cycles to “stabilize” before the release. Not good.

The answer to all the above challenges (and many others) is unit testing. Once your code is covered with unit tests, you can easily verify correct behavior as you add more and more logic. In this situation, the only bugs that QA might find will reflect missing test cases, or will come from untested parts of the code.

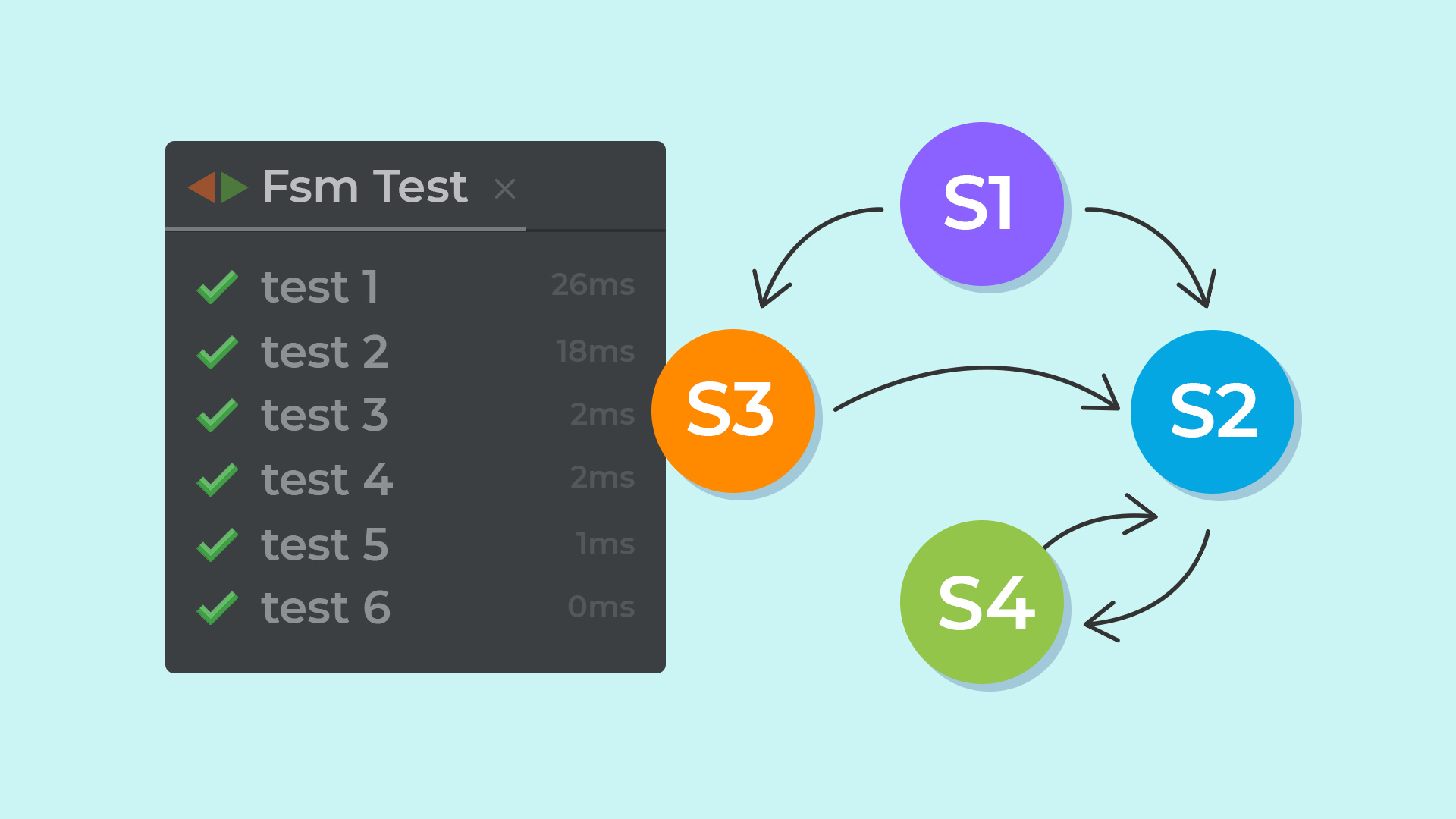

The final implementation of SyncController is covered with 71 unit tests. This might sound like too many tests for just one class (especially when you realize that there are three times as many lines of test code as implementation code), but it’s not. In fact, I’m pretty sure there are some test cases missing from this suite because a state machine of this complexity surely has more than 71 different states, transitions and side effects that need to be verified.

An interesting technical aspect worth noticing here is that I used manually written test doubles exclusively in these tests. Now, I don’t object to mocking frameworks in general and, in this case, I did try to use the MockK framework initially. However, this framework added huge overhead to the test suite’s execution time, so I decided to drop it almost immediately. Therefore, unless I missed something about it, I think this framework shouldn’t be used in any real-world project. Whatever quality-of-life improvements MockK offers, paying for them with this much overhead is a bad long-term trade-off.
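For readers who haven’t written test doubles by hand, the following sketch shows the general idea. The `startSync()` method on `HttpSyncDelegate`, the `HttpSyncDelegateTd` double and the tiny `SyncTrigger` class standing in for the system under test are all assumptions made for this example; they don’t reproduce the published code.

```kotlin
// Assumed, simplified shape of one collaborator interface.
interface HttpSyncDelegate {
    fun startSync()
}

// Hand-written test double: counts calls so that a test can assert on interactions.
class HttpSyncDelegateTd : HttpSyncDelegate {
    var startSyncCallCount = 0
        private set

    override fun startSync() {
        startSyncCallCount++
    }
}

// Tiny stand-in for the system under test (hypothetical, not the real SyncController).
class SyncTrigger(private val httpSyncDelegate: HttpSyncDelegate) {
    private var syncInProgress = false

    fun syncNow() {
        if (syncInProgress) return // ignore repeated requests while a sync is running
        syncInProgress = true
        httpSyncDelegate.startSync()
    }

    fun onSyncCompleted() {
        syncInProgress = false
    }
}
```

A test would construct `SyncTrigger` with an `HttpSyncDelegateTd`, call `syncNow()` twice and assert that `startSyncCallCount` equals 1. A double like this is plain Kotlin, so it needs no framework at all.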

You might wonder: “how can anyone come up with 71 unit tests upfront?”. The answer is that no one can. Furthermore, even after the implementation, no one would be able to write 71 unit tests (let alone be willing to do so). Therefore, the only way to implement such a complex state machine and cover it with unit tests (in a reasonable amount of time) is to practice so-called Test Driven Development. This basically means that you add one new test at a time, see it fail, add the minimum amount of code to make the test pass, verify that it passes, and then add the next test. This sounds counter-intuitive if you haven’t had experience with TDD so far, but that’s the best (and, in my opinion, the only) way to practice unit testing consistently.

However, TDD has a very strict precondition: you must be able to run your tests and get the results quickly. Otherwise, TDD is simply impractical. Unfortunately, in the case of this project, incremental builds could take up to two minutes. Since waiting this long after adding each test and writing each line of code wasn’t an option, I had to resort to modularization.

Modularization

This is an Android project, so it uses a build system called Gradle. Gradle supports projects with multiple “modules”, which are basically containers for code that can be built independently of one another. This way, even in the worst-case scenario, code changes within one module will lead to a rebuild of just that module and, at most, the modules that depend on it.

After realizing that, due to long build times, I couldn’t use TDD inside the main module of the project, I decided to extract SyncController and the associated tests into a standalone Gradle module. I called this module “sync” and, after getting permission from my client, I copied the full contents of this new module into the example Android project on GitHub. That’s the main reason why it became possible to use this code as an example: by writing it in a new standalone module, I made it self-contained and decoupled from the rest of the app.

Having said all that, I want to emphasize that modularization is a delicate topic, and, as I wrote in the past, can be easily overused. However, even if you don’t need to modularize your project yet, introducing new “temporary” modules can still come in handy. For example, if you know that you’re going to work on a specific feature extensively, you can create a new short-lived module while you work on that feature, and after you finish just refactor all the resulting code back into the original module. This way, you can get a considerable speedup while working on the feature without introducing new long-term modules into the project.

SOLID Principles

The problem with modularization (or, depending on how you look at it, one of its major benefits) is that interdependencies between Gradle modules can’t form cycles. In other words, if module A depends on module B (even transitively), then module B mustn’t depend on module A (even transitively).

Therefore, the moment I used SyncController inside the “main” module, I couldn’t reference any code that resided in the “main” module from within the “sync” module anymore. This presented a problem because, while the sync FSM is completely decoupled and independent, the side effects of the FSM’s states and transitions had to propagate deep into the old code. So, how could I invoke the preexisting flows resulting in all these side effects without coupling SyncController to anything inside the “main” module? The answer was: “interfaces”.

Those of you who have already looked at SyncController’s source code might’ve wondered why all its collaborators are interfaces called “delegates”:

class SyncController(
    private val loginStateDelegate: LoginStateDelegate,
    private val syncPreferencesDelegate: SyncPreferencesDelegate,
    private val realtimeSyncDelegate: RealtimeSyncDelegate,
    private val httpSyncDelegate: HttpSyncDelegate,
    private val longSyncDelegate: LongSyncDelegate,
    private val appForegroundStateDelegate: AppForegroundStateDelegate,
    private val loggerDelegate: LoggerDelegate,
) {
    ...
}

What’s going on here?

Well, since SyncController can’t reference any logic from the “main” module directly, it uses a bunch of special interfaces that reside in the “sync” module. These interfaces are very narrow in scope and are tailored to SyncController’s needs. The implementations of these interfaces, however, must be provided by the “main” module when it instantiates SyncController. This way, even though SyncController doesn’t depend on anything from the “main” module at compile time, it’ll use objects from “main” at runtime.
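The arrangement can be sketched in a few lines. In this illustration, the `isLoggedIn()` method, the `SyncControllerSketch` class, and the `SessionManager` and `LoginStateDelegateImpl` classes on the “main” side are hypothetical names chosen for the example; only the idea of a narrow interface owned by the “sync” module comes from the project.

```kotlin
// ---- Would live in the "sync" module (names and signatures are assumptions) ----

interface LoginStateDelegate {
    fun isLoggedIn(): Boolean
}

class SyncControllerSketch(private val loginStateDelegate: LoginStateDelegate) {

    var syncStarted = false
        private set

    // Compiles against the interface only; knows nothing about the "main" module.
    fun startSync() {
        if (!loginStateDelegate.isLoggedIn()) return
        syncStarted = true
    }
}

// ---- Would live in the "main" module, which depends on "sync" ----

// Stand-in for some preexisting class in the legacy code.
class SessionManager(var authToken: String? = null)

// Adapter that exposes the legacy class through the narrow interface.
class LoginStateDelegateImpl(
    private val sessionManager: SessionManager,
) : LoginStateDelegate {
    override fun isLoggedIn(): Boolean = sessionManager.authToken != null
}
```

The “main” module would then wire things up with something like `SyncControllerSketch(LoginStateDelegateImpl(sessionManager))`, so the compile-time dependency points from “main” to “sync” while the runtime call goes from the controller into code that lives in “main”.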

Readers who took my SOLID course should recognize this technique of inverting compile-time dependencies relative to runtime dependencies because, in that course, I explained the Dependency Inversion Principle (D in SOLID) using a very similar example. Indeed, all this “interface magic” is just DIP used to solve a real-world problem.

What about other SOLID principles, can we find any of them employed here as well?

Well, note that SyncController implements the FSM, but it isn’t concerned with the lower-level implementation details of the sync mechanism (networking, data processing, persistence, etc.). Instead, these details are encapsulated in implementations of three “delegate” interfaces: HttpSyncDelegate, RealtimeSyncDelegate and LongSyncDelegate. That’s, of course, the Single Responsibility Principle in action.

Then, some of these “delegate” interfaces also follow the Interface Segregation Principle (I in SOLID). For example, the class that implements AppForegroundStateDelegate has more methods than this interface defines. However, since SyncController doesn’t need to know about the additional methods, the interface “hides” this irrelevant information from it.
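As a rough illustration of this point, consider the sketch below. The method `isAppInForeground()`, the `AppForegroundStateTracker` class with its extra methods and the `describe` function are assumptions invented for the example, not the actual code from the project.

```kotlin
// Narrow interface tailored to its client's needs (assumed single method).
interface AppForegroundStateDelegate {
    fun isAppInForeground(): Boolean
}

// Hypothetical implementing class: it offers more than the interface exposes.
class AppForegroundStateTracker : AppForegroundStateDelegate {
    private var startedScreens = 0

    // Extra methods, relevant only to whoever observes screen lifecycle events.
    fun onScreenStarted() {
        startedScreens++
    }

    fun onScreenStopped() {
        if (startedScreens > 0) startedScreens--
    }

    override fun isAppInForeground(): Boolean = startedScreens > 0
}

// A client typed against the interface can only see isAppInForeground().
fun describe(delegate: AppForegroundStateDelegate): String =
    if (delegate.isAppInForeground()) "foreground" else "background"
```

Inside `describe`, calling `delegate.onScreenStarted()` wouldn’t even compile, which is exactly the kind of “hiding” described above.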

One could say that the Open-Closed Principle is also employed here because I can replace the implementations of all SyncController’s collaborators if I’d like to, but that would be a stretch. I mean, I can do that of course (and I do that in unit tests), but that’s not exactly what OCP means.

Last, since no part of this code is intended to give rise to inheritance hierarchies in the future, the Liskov Substitution Principle doesn’t play a role here at all.

So, three out of five SOLID principles are used in this example.

Summary

In this article, I explained how I used several software engineering best practices in a real-world codebase to solve real users’ problems. This approach enabled me to deliver the required new feature while also improving the overall quality of the code. The project was completed on time, even though the eventual amount of affected code ended up being much larger than I had initially anticipated. In my opinion, this only shows that, oftentimes, the “clean” way is the fastest one.

I can’t name the company that ordered this project, but I still want to thank them for giving me the opportunity to share all this information with you. It wasn’t just a passive “go ahead” from their side because they had to actually spend time on reviewing the code and reading the draft of this post. So, thank you, “a company”!

And, of course, thank you for reading! As usual, you can leave your comments and questions below.

Great article, Vasiliy! Thanks for putting this together.

Two quick questions about something that took me by surprise:

– Doesn’t this FSM approach look rather similar to the reducer function MVI proposes to use? I know you’ve normally advocated against MVVM/MVI architectures (yep, Google think they’re cool 😐 ), but this approach above reminded me of that.

– Taking into account the MVC implementation you normally defend, where would you use this SyncController? Would you invoke it in your MVC controller (i.e. Activity/Fragment)?

Many thanks and regards,

Hi Pablo,

I’m not an expert on MVI, but, since FSM is a general and very popular technique, I wouldn’t be surprised if MVI employed it. In fact, you can use FSMs in any MVx, not just MVI (I use them in some of the more complex MVC controllers).

As for where to use this SyncController in MVC (or any other MVx), it’s up to you. For example, you could use the startSync method to initiate a new sync when the user clicks on the “sync now” button. What’s important here is to make sure this SyncController is a global object, so that all clients get a reference to the same instance of this class.